Samsung to Begin HBM4 Chip Production for Nvidia

Samsung plans to begin producing HBM4 memory chips for Nvidia next month, targeting demand from AI accelerators as it competes with SK Hynix to supply advanced memory.

TIME Magazine selected the CEOs driving the global AI race as its 2025 Person of the Year, highlighting their impact on technology, policy, and geopolitics.

Nvidia says its newest AI server runs mixture-of-experts models 10 times faster, using 72 chips linked by high-speed interconnects, helping it maintain an edge over AMD and other competitors.

OpenAI is investing in Thrive Holdings and will embed its own teams in Thrive's portfolio companies to accelerate AI adoption. The move deepens OpenAI's circular dealmaking with partners that also invest in it.

AMD CEO Lisa Su dismissed fears of an AI spending bubble, saying Big Tech’s aggressive investments in computing infrastructure are essential to accelerating innovation and long-term growth.

Oracle Cloud Infrastructure will deploy 50,000 AMD Instinct MI450 GPUs beginning in 2026, underscoring the growing competition Nvidia faces in the global race for AI computing capacity.

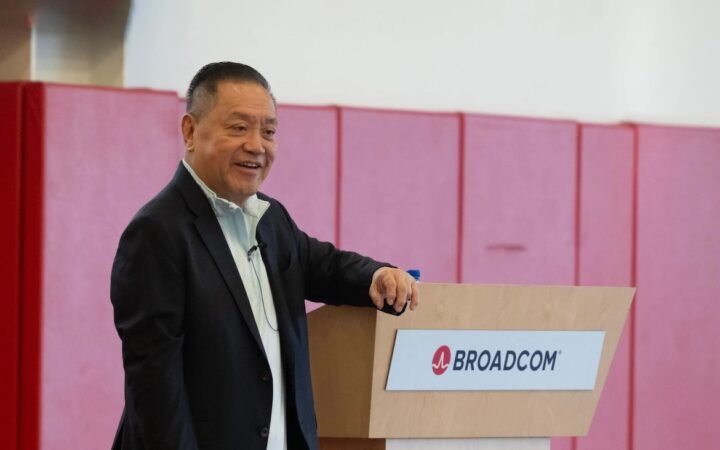

OpenAI has announced a strategic partnership with Broadcom to design and deploy 10 gigawatts of custom AI accelerators, expanding its hardware ecosystem beyond Nvidia and AMD.

OpenAI's computing commitments now exceed $1 trillion, spanning deals with AMD, Nvidia, Oracle, and others. The milestone underscores the capital intensity driving AI infrastructure expansion.

AMD and OpenAI have entered a multi-year, 6-gigawatt partnership to deploy AMD Instinct GPUs and build next-generation AI infrastructure, marking one of the largest compute collaborations in the industry’s history.

OpenAI has entered into a strategic agreement under which AMD will supply high-performance AI chips, helping OpenAI diversify its hardware sources and build next-generation compute capacity.

IBM and AMD are joining forces with San Francisco–based Zyphra to build one of the world’s largest generative AI training clusters. Powered by AMD Instinct MI300X GPUs on IBM Cloud, the collaboration aims to accelerate Zyphra’s multimodal foundation models and advance open-source AI innovation.