AI Now Handles Chronic Prescription Renewals in Utah

Utah has launched an AI pilot for prescription renewals that lets algorithms handle routine medication management without a physician, a move that highlights regulatory and safety challenges.

In artificial intelligence, a hallucination is an output that an AI model presents confidently as fact but that is false or unsupported by real data. The phenomenon often occurs in large language models and generative systems when they fill gaps in knowledge or misinterpret training information. Depending on the application, hallucinations can take the form of incorrect facts, fabricated sources, or unrealistic images. They highlight one of the biggest challenges in AI development: ensuring reliable, accurate, and verifiable outputs. Researchers and developers use techniques such as model fine-tuning, retrieval-augmented generation, and human oversight to reduce hallucinations and build more trustworthy systems.

Anthropic publishes Claude’s constitution, a detailed framework guiding AI behavior, ethics, safety, and helpfulness, available under Creative Commons for transparency and research.

OpenAI now uses age prediction to adjust ChatGPT’s safety settings for teens. Users 18 and older can verify their age to disable extra restrictions.

OpenAI is on track to unveil its first consumer device in the second half of 2026, signaling a major expansion beyond software as the company explores a new category of AI-native hardware.

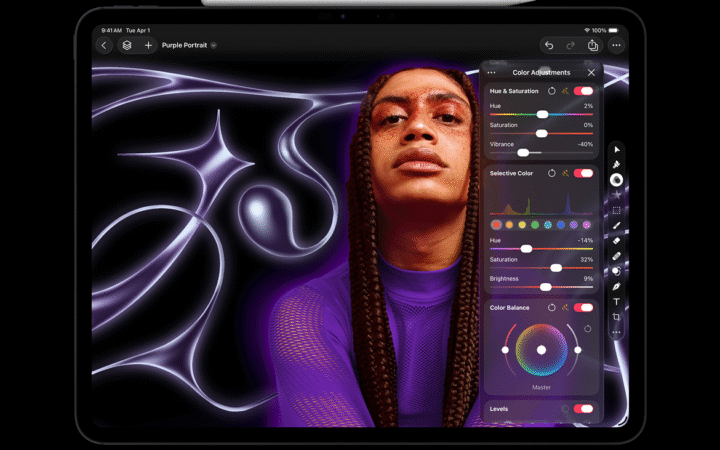

Apple introduced Apple Creator Studio, a subscription bundle combining professional creative apps with new AI-driven features across video, music, imaging, and productivity tools. The offering expands Apple’s push into intelligent software workflows for creators.

Apple will rely on Google’s Gemini models and cloud infrastructure to power future artificial intelligence features, including Siri. The multi-year partnership supports Apple’s next generation of foundation models.

The Association of Chartered Certified Accountants will discontinue remote exams from March 2026, citing rising misconduct linked to AI tools. Most candidates will return to in-person test centers.

Chinese generative AI startups MiniMax and Zhipu AI have disclosed their financials ahead of potential Hong Kong listings, highlighting modest revenues and mounting losses compared with U.S. peers.

Disney and OpenAI announced a three-year licensing deal allowing Sora to generate fan-inspired short videos featuring over 200 Disney, Marvel, Pixar, and Star Wars characters.

ChatGPT now reaches more than 800 million weekly users, creating a powerful adoption flywheel that is speeding the transition from AI experimentation to full-scale enterprise deployment, according to OpenAI’s new 2025 report.