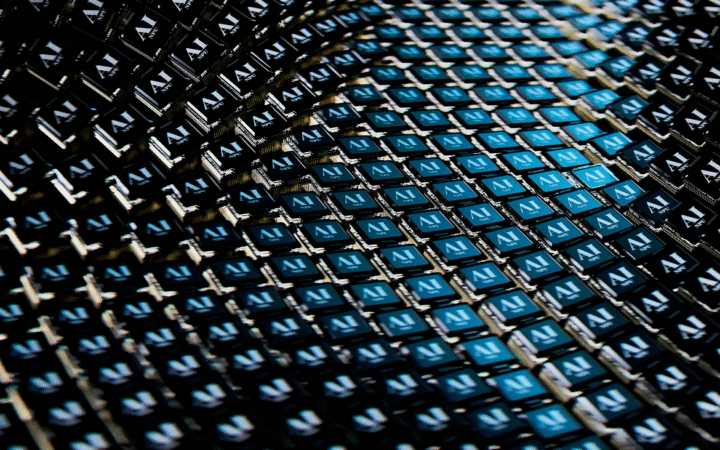

Cisco has rolled out a new networking chip, dubbed P200, that aims to unify distributed AI data centers into a single coherent infrastructure. The P200 is paired with a new router designed to span large geographic distances, allowing physically separate facilities to operate as one training system.

The design does in a single chip what once required 92 separate chips. According to Cisco, it cuts power consumption by up to 65% compared with similar hardware. It addresses one of the biggest challenges in scaling AI: keeping data synchronized across locations without losing performance. Early adopters include major cloud customers such as Microsoft and Alibaba, who see the solution as crucial to handling large-scale AI workloads.

As AI models grow in size and complexity, training demands often require multi-site compute deployments. These compute hubs are frequently placed far apart due to power, cooling or land constraints. Cisco says the P200 chip and router help to stitch these disparate centers together in a tightly synchronized fashion.

Technical Innovations & Strategic Leverage

At the heart of Cisco’s offering is advanced buffering and data alignment logic. This lets large bursts of traffic between data centers flow without losing packet order or coherence. That matters in AI training, where model state must remain consistent across every participating site.

Replacing 92 conventional chips with a single P200 chip not only improves density but also simplifies hardware design and management. The chip’s architecture streamlines routing logic and lowers energy costs, making wide-area AI systems more practical to build.

For Microsoft, the chip offers a new tool for managing massive training jobs that span multiple data center sites. For Cisco, the P200 provides a stronger foothold in the AI infrastructure market and positions the company against rivals like Broadcom, which has competing networking offerings.

Challenges, Market Impact & What’s Next

While the P200 is a bold technical leap, its adoption isn’t guaranteed. Integrating with existing data center networks, ensuring end-to-end reliability, and guaranteeing consistent performance across wide-area links are all difficult engineering problems.

The launch also underscores a shift in AI infrastructure dynamics: networking is becoming nearly as critical as compute for enabling large models. With hardware supply chains under stress, innovations that improve connectivity may become key differentiators.

Going forward, Cisco may push the P200 into more products and strike further partnerships with cloud and AI companies. As more organizations opt to distribute compute across geography, this chip could become a foundational element of future AI systems.