Amazon Web Services (AWS) announced expanded AI offerings at its AWS re:Invent conference, introducing new capabilities in Amazon Bedrock and Amazon SageMaker AI. The updates aim to simplify building and fine-tuning custom large language models (LLMs) for enterprise developers.

The cloud provider now offers serverless model customization in SageMaker, allowing developers to build models without managing compute resources or infrastructure. Users can work with the feature through a self-guided point-and-click workflow or through an agent-led interface, currently in preview, that accepts natural language prompts. The system supports AWS’s Nova models and select open-source models, including DeepSeek and Meta’s Llama.

Ankur Mehrotra, AWS general manager of AI platforms, explained that the tools can be used for domain-specific applications. “If you’re a healthcare customer and want a model to understand medical terminology, you can provide labeled data, select the technique, and SageMaker fine-tunes the model automatically,” he said.
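
To make the "provide labeled data" step in Mehrotra's example concrete, the following is a minimal sketch of how a team might package labeled examples before handing them to a fine-tuning service. The `prompt` and `completion` field names, the `to_jsonl` helper, and the sample records are illustrative assumptions, not a format documented by AWS; check the service documentation for the schema it actually expects.

```python
import json

def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSON Lines text.

    The "prompt"/"completion" field names are a common convention and
    an assumption here, not a schema confirmed for SageMaker.
    """
    lines = []
    for prompt, completion in examples:
        # Reject empty records so bad labels fail early rather than in training.
        if not prompt.strip() or not completion.strip():
            raise ValueError("each example needs a non-empty prompt and completion")
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)

# Hypothetical labeled examples for a medical-terminology use case.
examples = [
    ("What does 'MI' stand for in a cardiology note?", "Myocardial infarction"),
    ("What does 'HTN' stand for in a patient chart?", "Hypertension"),
]

jsonl_text = to_jsonl(examples)
```

Each line of `jsonl_text` is one independent JSON record, which keeps large datasets easy to stream and validate record by record.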

Reinforcement Fine-Tuning in Bedrock

AWS is also launching Reinforcement Fine-Tuning in Bedrock, which enables developers to customize models using either a reward function or a pre-set workflow. The platform manages the full model customization process from start to finish. These initiatives reflect AWS’s focus on frontier LLMs and enterprise-specific model customization.
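
For readers unfamiliar with the term, a reward function is simply code that scores a model's output so that training can favor higher-scoring responses. The sketch below is a generic illustration of the idea, not Bedrock's interface: the `reward` name, its signature, and the term-overlap scoring rule are all assumptions chosen for simplicity.

```python
import re

def reward(response: str, reference: str) -> float:
    """Score a response between 0.0 and 1.0 by the fraction of
    reference terms it contains (hypothetical scoring rule)."""
    def terms(text: str) -> set:
        # Lowercase and split into alphanumeric tokens.
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    expected = terms(reference)
    if not expected:
        return 0.0  # Nothing to compare against.
    return len(expected & terms(response)) / len(expected)
```

Under this rule, a response that mentions every term in the reference scores 1.0, and one that mentions none scores 0.0. Production reward functions are typically stricter, for example checking a structured answer or running tests against generated code.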

Nova Forge, announced earlier in the week, is a service through which AWS builds custom Nova models for enterprise customers for $100,000 a year. Mehrotra noted that differentiation through model customization is key for enterprises seeking unique AI solutions.

AWS AI Factories Bring AI On-Premises

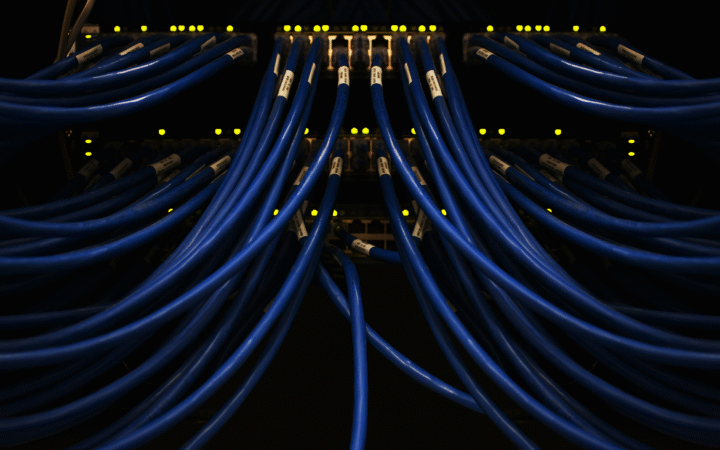

In a related development, AWS unveiled AI Factories, a service delivering dedicated AI infrastructure inside customer data centers. The offering combines AWS AI services, NVIDIA GPUs, AWS Trainium chips, high-speed networking, and storage to enable organizations to develop and deploy AI applications at scale without building infrastructure from scratch.

AWS AI Factories operate like a private AWS Region, providing managed access to AI tools, foundation models, and storage while leveraging customers’ existing power, network, and space. The service is designed for regulated industries and public sector organizations that require secure, low-latency AI environments. Partnerships with NVIDIA and projects such as the AI Zone in Saudi Arabia demonstrate the scale and performance of these on-premises deployments.

These combined innovations (customizable LLMs through SageMaker and Bedrock, managed Nova Forge models, and AI Factories) position AWS to compete more effectively with established enterprise AI providers while reducing operational complexity for large-scale AI adoption.