OpenAI co-founder Ilya Sutskever believes the AI industry is reaching a point where simply scaling computation is no longer enough, and that true progress now requires returning to deep scientific research.

Speaking on the Dwarkesh Podcast, Sutskever explained that in recent years the AI industry has operated on a straightforward principle: to make a model smarter, give it more compute and more data. For a while, that approach worked. Companies bought ever-larger numbers of GPUs, built massive data centers, and saw steady improvements. For businesses, this was an attractive strategy: low-risk, predictable, and clearly executable.

But, according to Sutskever, that formula has now been exhausted. Data is finite, and companies already possess enormous compute resources. He doubts that further scaling alone will transform the field:

“Do people believe that increasing scale by 100× will change everything? There will be differences, yes. But that everything will be completely transformed just by scaling — I don’t think so.”

He argues that the industry is entering a new phase — a return to genuine scientific inquiry — except this time, researchers have access to unprecedented computational power. Compute remains essential, he said, especially when everyone is operating within the same conceptual paradigm. However, what becomes decisive now is how that compute is used. And that is fundamentally a research problem.

The Core Scientific Challenge: Poor Generalization

Sutskever highlighted that today’s models still struggle with generalization, especially compared to humans. AI systems need large amounts of data and many examples to learn tasks that humans can grasp after seeing just one or two.

“Models generalize much worse than humans. This is absolutely obvious. And it’s a fundamental problem,” he said.

Because of this limitation, Sutskever believes that major progress in AI will no longer come from scaling alone, but from breakthroughs that improve the underlying science of learning and generalization.

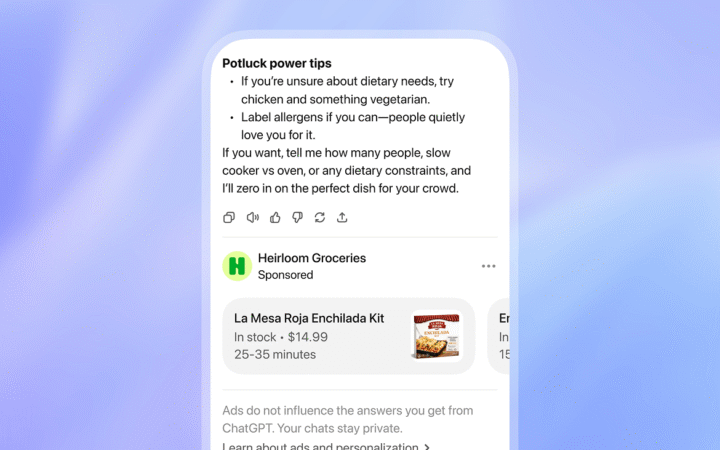

Sutskever's remarks come as forecasts suggest ChatGPT could have as many as 220 million paying users by 2030, which would place it among the world's largest subscription services.